Each week we’ll gather headlines and tips to keep you current with how generative AI affects PR and the world at large. If you have ideas on how to improve the newsletter, let us know!

What You Should Know

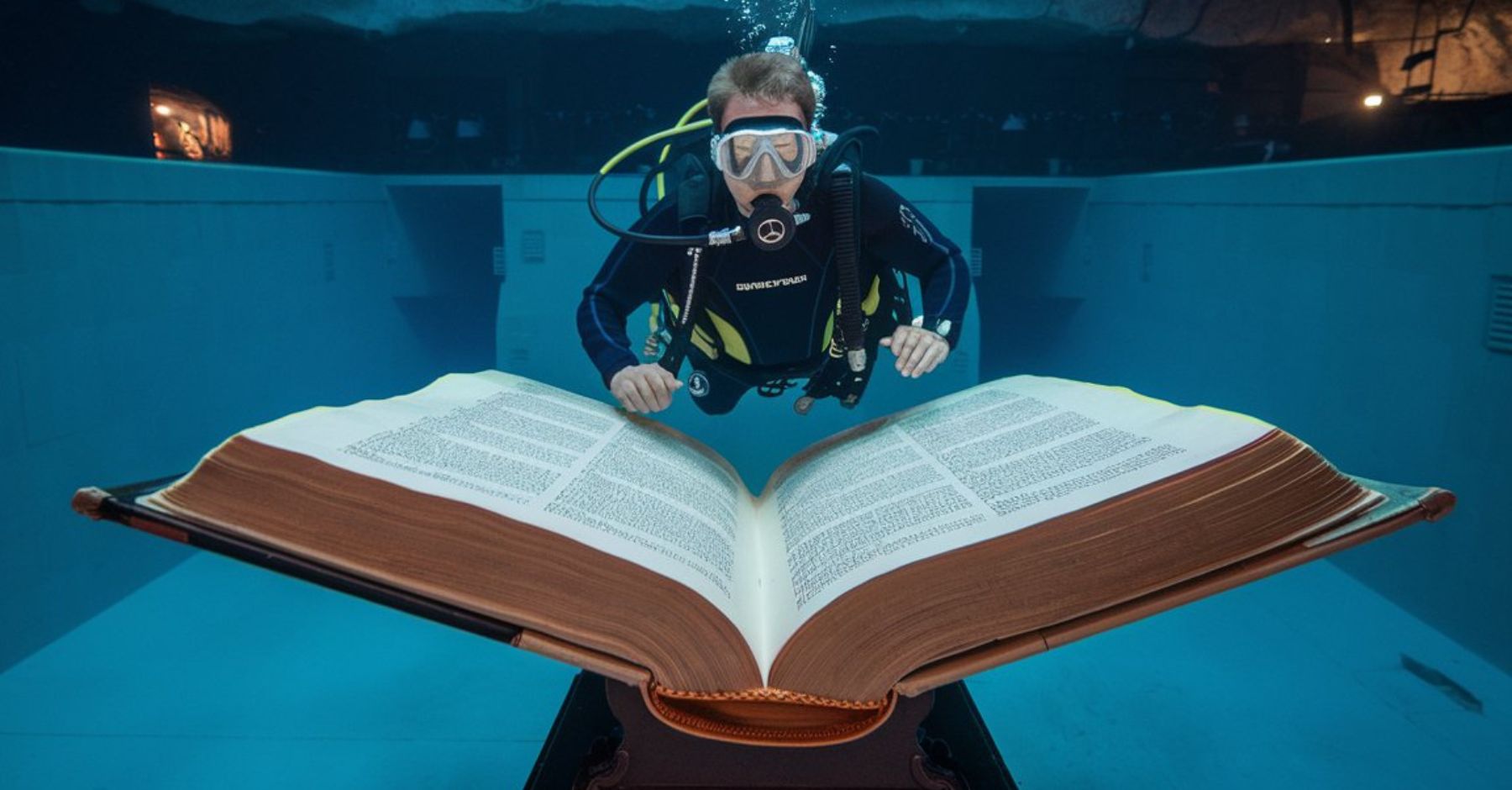

How Deep Is Your Research?

If you’re counting at home, Perplexity makes three AI companies that call a product “Deep Research.” On Friday, the company behind the search tool released its version of Deep Research — not to be confused with OpenAI’s Deep Research, Google’s Deep Research, Grok’s DeepSearch, and certainly not with DeepSeek, the Chinese AI platform that governments are banning over privacy concerns.

These tools go beyond basic search, combining internet retrieval with AI reasoning models to deliver more insights, context, and data. Instead of just listing sources, they generate long, well-thought-out reports, summarize key findings, and draw connections between disparate pieces of information, presenting results with much more … well … depth than traditional large language models. Deep Research (all three of them!) aims to produce academic-level research in minutes rather than hours.

For communicators, that means faster access to background information, competitive analysis, and industry trends. Deep Research helps with complex tasks like conducting due diligence on a rebrand, auditing brand perception, or vetting potential partners. However, even though reasoning models “think” longer than traditional LLMs, they still require human oversight to make sure the logic is sound and the sources are legitimate.

Elsewhere …

- New York Times Goes All-in on Internal AI Tools

- Researchers Are Training AI to Interpret Animal Emotions

- ‘Hopeless’ to Potentially Handy: Law Firm Puts AI to the Test

- The Hottest AI Models, What They Do, and How to Use Them

- Kansas Researchers Developing AI-boosted Program to Help Teach Writing Skills to Students With Disabilities

Tips and Tricks

Use the same thread

What’s happening: Newer LLMs have larger context windows, meaning they remember and can reference more of your conversation than they used to. For instance, one of ChatGPT’s early models, GPT-3.5 Turbo, had a context window of 16,385 tokens. ChatGPT’s current flagship model, GPT-4o, can handle 128,000 tokens. Other models, like Claude 3.5, reach as high as 200,000 tokens, or about 500 pages of material.

Why it matters: It used to be rather easy to overload a thread to the point where it would start forgetting context from early in the conversation, but now we can get more use out of every thread.

Try this: Instead of starting a new conversation for every request, keep related prompts in the same thread. Say you’re working on a new product announcement and you’ve uploaded a bunch of context about how the tool works, its effect on the market, and what it’s capable of. Use the same thread to produce several pieces of content like a press release, white paper, blog post, and social media posts. This keeps everything consistent and saves time by letting the model build on previous responses.
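If you want a rough sense of whether your background materials will fit in one thread, a quick back-of-the-envelope check can help. The sketch below uses the context window sizes mentioned above and assumes about four characters per token, a common rule of thumb for English text rather than an exact count. The function names and the 4,000-token reserve for the model's replies are our own illustrative choices, not anything the model providers publish.

```python
# Context window sizes quoted in this newsletter, in tokens.
CONTEXT_WINDOWS = {
    "GPT-3.5 Turbo": 16_385,
    "GPT-4o": 128_000,
    "Claude 3.5": 200_000,
}

# Assumption: roughly 4 characters per token for English text.
# Real tokenizers vary, so treat the result as a ballpark figure.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Return a rough token estimate for a piece of text."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def models_that_fit(documents: list[str], reserve: int = 4_000) -> list[str]:
    """List the models whose window holds all documents plus a reserve.

    `reserve` is a hypothetical allowance for prompts and replies.
    """
    total = sum(estimate_tokens(doc) for doc in documents) + reserve
    return [name for name, window in CONTEXT_WINDOWS.items() if total <= window]


if __name__ == "__main__":
    # Stand-in for uploaded product briefs, market notes, and so on.
    materials = ["x" * 80_000]
    print(models_that_fit(materials))
```

If the list comes back empty, that is a hint to trim the uploads or split the work across threads after all.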

Quote of the Week

“These tools are going to be built one way or another, whether it’s by this team or somebody else. My hope is that, by creating a space for connecting [journalists and software developers], it can maybe be built in a much more responsible way.”

— Benjamin Toff, Director of the Minnesota Journalism Center, to MinnPost on a hackathon that benefits news outlets
