Headlines You Should Know

Using AI to Combat Misinformation

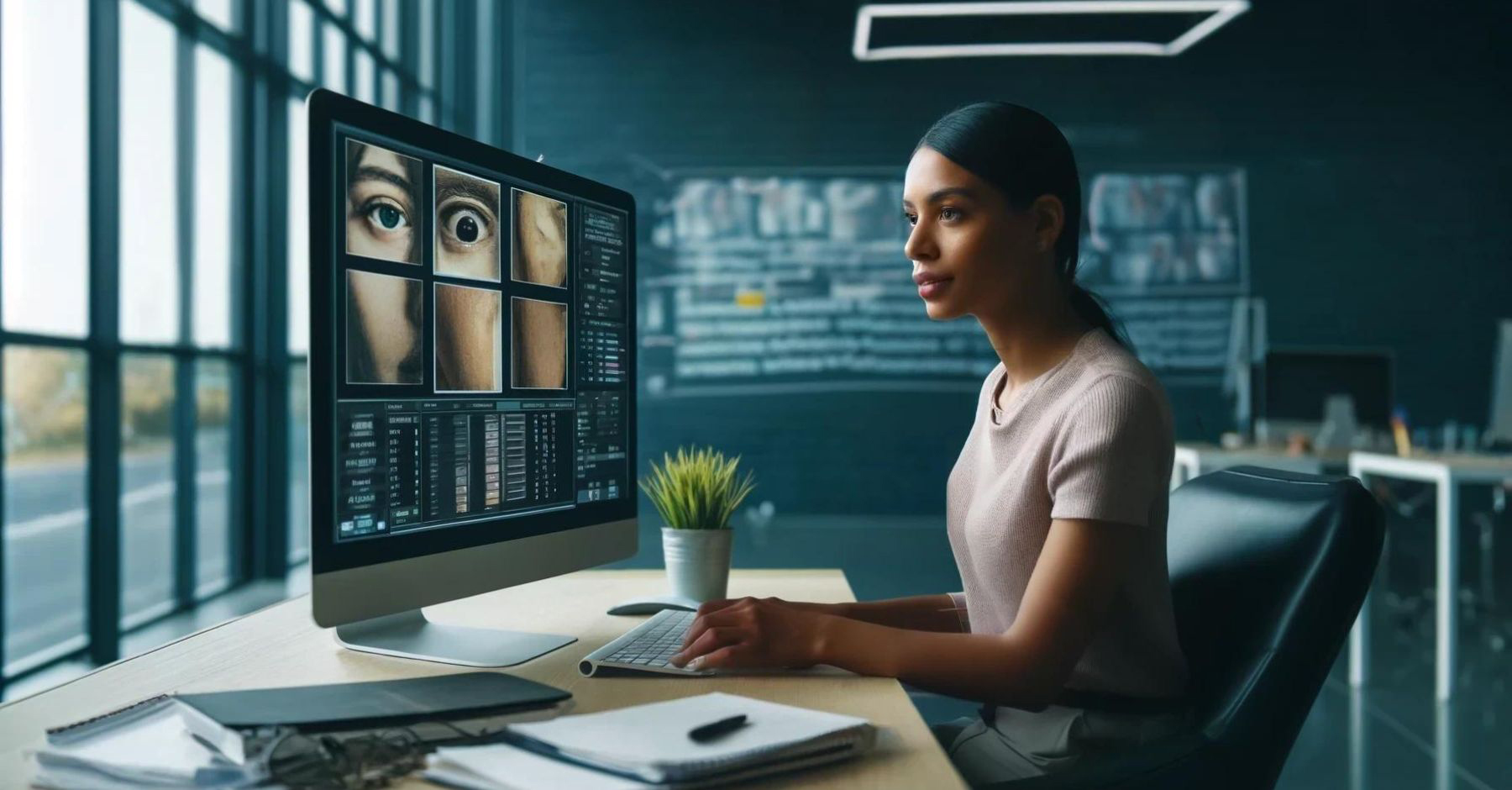

Last week, the United Nations held its annual AI for Good Summit, which focused on fighting AI misinformation with AI. Tech companies are also trying to raise awareness of the issue and keep their innovations from being used for harm.

Microsoft has warned that Russian influence operations are using AI to target the Paris Olympics, and Google has revealed a staggering surge in AI-generated image misinformation. Last week, OpenAI also published a report, including specific case studies, that details its efforts to stop threat actors from abusing ChatGPT.

For communications professionals, AI misinformation is a new occupational hazard. These campaigns aren’t reserved for politics or cyberattacks: some bad actors use AI to create chaos simply to seed mass distrust. So how should you combat this, both for your own awareness and to maintain the credibility of the brand(s) you represent?

AI tools can help you identify what’s real and what’s not. Polygraf and AI or Not detect AI-generated content in writing and images, respectively, and Otherweb detects and filters misinformation in media content. But these platforms are only one piece of the puzzle.

Seek regular training so you and your team can better recognize and respond to AI-generated misinformation. By understanding how bad actors work with AI, you can mitigate its impact and ensure that you share only trustworthy, credible content with your audience.

Elsewhere …

- OpenAI is Making ChatGPT Cheaper for Schools and Nonprofits

- Can an AI Version of the Dead Help with Grief?

- PODCAST: Will AI-written Media Pitches Ever Be Good Enough?

- The Uncanny Rise of the World’s First AI Beauty Pageant

- Google Makes Adjustments to AI Overviews After Rocky Rollout

Tips and Tricks

How to make your content more relatable

What’s happening: AI can quickly create lots of content — and not necessarily good content. By using AI tools responsibly, you can develop high-quality content efficiently without sounding robotic. One way to do this is by adding a step to your process: asking the AI tool to insert metaphors that make complex topics more relatable.

Why it matters: Some experts predict that 90% of online content will be AI-generated by 2026. To resonate with your audience, your content needs to be unique and of high quality.

Try it out: After you’ve developed a piece of content, review it with the aim of identifying specific topics that may be too abstract, technical, or otherwise difficult for the reader to understand. Ask the AI tool to add a metaphor that simplifies the topic for the reader. Of course, you’ll need to take a step back and think about whether the metaphor actually applies to that particular topic before you include it in your final draft.

Quote of the Week

“Generative AI skills are in demand because few people have learned how to truly master generative AI. But these people who have mastered it can be anyone. This is not somebody you have to hire because they got their master’s at MIT. You just need to do one thing: Talk to it like a human.”

— Conor Grennan, Chief AI Architect at NYU’s Stern School of Business, in his latest newsletter